Most Marketing Workflows Aren’t Built for AI Decisioning. Here’s How Marketers Can Redesign Them.

Marketing doesn’t just need better data or AI; it needs better workflows. Here’s how to re-engineer marketing processes for adaptive, context-driven decisioning using the Experimental Marketer Framework.

I have been a Martech Square reader for a while, so when I was asked to contribute an article, I was stoked, obviously! Not only was I invited, I was also given carte blanche by Pawan Verna: I could cover any Martech-related topic I wished!

Then came my research era: (re)reading Martech Square's posts, checking how I could riff off their content, and pitching some topics that could help marketers working with Martech. I finally settled on AI decisioning, a topic I had already covered in the past.

Pawan has written some interesting posts on the subject, and his piece on the need to fix marketing workflows before AI decisioning can work became the starting point for this collab article, which you can read below.

As always, I look forward to hearing from you: just reply to this email and I will answer!

________________________

Your marketing automation platform just generated 10,000 personalized emails in the time it took you to read this sentence. Those emails will get scheduled, sent, and reported on with remarkable efficiency.

But what no dashboard will tell you is that you may simply be executing bad workflows more quickly. When marketing organizations treat agentic AI as a productivity booster (as a way to do existing work faster), they’re optimizing for speed within a system designed for human decision-making, manual coordination, and batch-and-blast campaign logic. Agentic AI doesn’t just make your current workflows more efficient. It can fundamentally restructure who should be making decisions, what those decisions are based on, and how work gets organized in the first place.

This Martech Square article has rightly argued that you need to fix your marketing workflows before deploying AI agents. I’d actually argue we need to go further: many workflows shouldn’t be fixed at all. They should be redesigned entirely around a different operating model.

The System-Level Shift Most Marketers May Miss

Most marketers (and their companies) tend to treat agentic AI as a productivity tool when it’s actually a fundamental restructuring force. Understanding this distinction determines whether you are optimizing obsolete workflows or building the systems that can define competitive advantage.

Sangeet Paul Choudary, author of Reshuffle: Who Wins When AI Restacks the Knowledge Economy, makes a critical distinction that most marketers overlook. In his analysis of AI transformation, he points out that when you press organizations to explain what “AI-native” or “AI-first” actually means, the answers tend to collapse into clichés: faster automation, smarter tools, agentic workflows. All of these are task-level improvements.

Choudary argues that AI, and particularly autonomous AI agents, doesn’t just perform tasks differently. It can restructure entire systems of work by taking over planning and resource allocation. If you treat AI as just another tool to plug into your existing marketing stack, you may be making the same mistake as the “steam-powered factories that initially treated electricity as just a better way to spin the same central drive shaft”.

Let me use some marketing examples to illustrate the difference:

Task-level thinking: “AI assists with audience segmentation, making my targeting more precise.”

System-level thinking: “AI agents can make real-time decisions about what each customer should receive based on hundreds of contextual variables. This transforms segmentation from a periodic strategic exercise into a continuous stream of micro-decisions.”

See the difference? In the first scenario, you are still orchestrating campaigns with AI assistance. In the second, AI is orchestrating engagement with your oversight. The human role shifts from executor to architect.

This isn’t just a semantic distinction. As Choudary notes in his analysis, the real problem with simplistic AI adoption narratives is that they direct attention to the individual task level when the real shift is happening at the level of the entire system of work. Most marketing leaders are stuck in workflow optimization mode, building impressive fortifications against the wrong threat. They’re asking “How do we make our existing workflows more efficient with AI?” when they should be asking “What workflows should exist when AI can decide?”

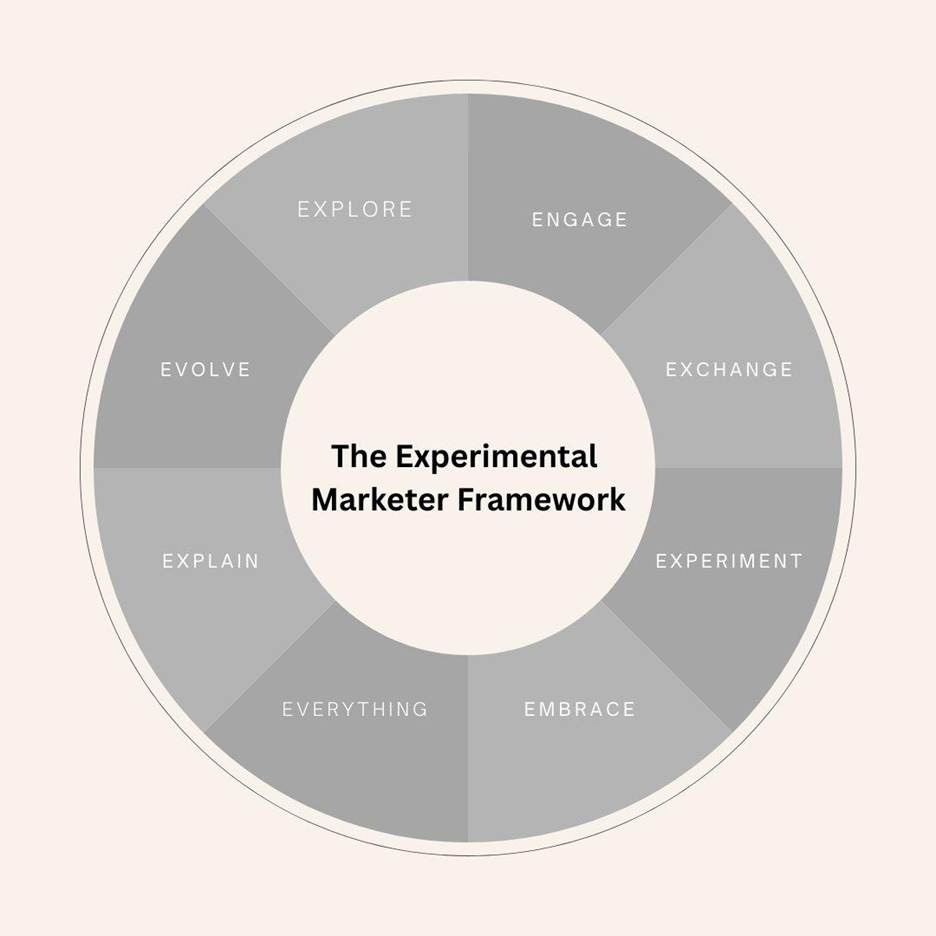

The Experimental Marketer Framework: A Marketer’s Blueprint for Rethinking

So if optimization isn’t enough, what is? You need a systematic approach to reimagining how marketing work gets organized around AI decisioning. That’s where the Experimental Marketer Framework™ comes in.

This framework consists of eight interconnected principles called the 8 Es: Explore, Engage, Exchange, Experiment, Embrace, Everything, Explain, and Evolve. These aren’t steps in a process or boxes to check, but rather a methodology for building marketers (and marketing organizations) that can thrive when machines make an increasing number of decisions about customer engagement.

Let me walk through how each principle guides you toward redesigning your workflows for the age of agentic AI, not just fixing them.

The Experimental Marketer Framework

1. Explore: Map Decision Points, Not Just Data Points

Traditional exploration in marketing means auditing your platforms, data sources, and customer touchpoints. That’s valuable, but insufficient for agentic AI.

The redesign prompt: Don’t stop at mapping existing platforms. Map the decision points where AI could act autonomously.

Instead of asking “What customer data do we have?”, ask “Where are humans currently making repetitive decisions that an agent could own?” Look for patterns in how your team decides what offer to present, which channel to use, what timing is optimal, or how to respond to customer signals.

Workflow implication: Your exploration becomes about discovering decisioning opportunities, not just completing data audits. You’re identifying where in the customer journey autonomous agents should have decision authority (under human-made strategy), and what information they need to decide well.

2. Engage: Build Coalitions Around New Authority Structures

Getting stakeholder buy-in for a new marketing tool is one thing, but rebuilding organizational authority around machine decisioning is entirely different.

The redesign prompt: Build coalitions around new authority structures, not just project buy-in.

When AI agents make autonomous decisions about customer engagement, fundamental questions emerge: Who owns accountability when an agent makes a decision that underperforms or offends? Who reviews agent decision quality? Under what circumstances should humans intervene? How do we balance agent autonomy with brand safety?

These aren’t IT questions or marketing questions. They’re organizational design questions. You need Legal to define acceptable risk boundaries, Finance to establish decision value thresholds, IT to ensure compliance and auditability, and Marketing/Data Ops to design governance frameworks.

Workflow implication: Engagement becomes about designing governance models that assume machine decisioning from the start, with ethical checkpoints defined (and reviewed) by humans, not retrofitting approval loops designed for human decision-makers onto autonomous systems.

3. Exchange: Design for Continuous Agent Learning

Most marketing technology stacks are built around static rule engines: “If customer does X, then send Y.” Even sophisticated decisioning engines often rely on rules that humans periodically update.

The redesign prompt: Design for continuous agent learning, not static rule engines.

In traditional workflows, data flows from systems to humans who analyze it, make decisions, and program those decisions back into tools. In redesigned workflows, data flows continuously to agents that decide in real time, with human-agent feedback loops that improve decision quality over time.

This means your data infrastructure needs to support not just reporting and segmentation, but real-time decisioning and actioning. Your customer data platform (or wherever customer data unification happens) isn’t just a source of truth anymore; it’s also an input to autonomous decision systems. Your campaign metrics aren’t just performance dashboards; they’re also training data for agent improvement.

Workflow implication: You move from scheduled campaign logic (plan, build, launch, measure) to real-time decisioning architectures where agents continuously sense, decide, act, and learn.
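To make the contrast concrete, here is a minimal Python sketch, not a production design. It puts a hand-written rule next to a simple epsilon-greedy bandit (a standard technique swapped in here for illustration, not something the framework prescribes) that runs the sense, decide, act, learn loop. The action names, the two customer contexts ("research" and "purchase"), and the response rates are all hypothetical and exist only to drive the simulation.

```python
import random
from collections import defaultdict


# A static rule: written once by a human, changed only when someone edits it.
def static_rule(customer: dict) -> str:
    return "discount_email" if customer.get("viewed_pricing") else "newsletter"


class EpsilonGreedyAgent:
    """Illustrative agent that keeps learning which action works best per context."""

    def __init__(self, actions: list, epsilon: float = 0.1, seed: int = 0):
        self.actions = actions
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)     # (context, action) -> times tried
        self.rewards = defaultdict(float)  # (context, action) -> total reward

    def estimate(self, context: str, action: str) -> float:
        n = self.counts[(context, action)]
        return self.rewards[(context, action)] / n if n else 0.0

    def best(self, context: str) -> str:
        # Highest estimated reward so far (ties go to the first action listed).
        return max(self.actions, key=lambda a: self.estimate(context, a))

    def decide(self, context: str) -> str:
        # Explore a random action epsilon of the time; otherwise exploit the best.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return self.best(context)

    def learn(self, context: str, action: str, reward: float) -> None:
        self.counts[(context, action)] += 1
        self.rewards[(context, action)] += reward


ACTIONS = ["discount_email", "newsletter", "sms_reminder"]
# Hypothetical response rates, used only to simulate customers.
TRUE_RATES = {
    ("research", "discount_email"): 0.02,
    ("research", "newsletter"): 0.08,
    ("research", "sms_reminder"): 0.01,
    ("purchase", "discount_email"): 0.15,
    ("purchase", "newsletter"): 0.03,
    ("purchase", "sms_reminder"): 0.10,
}


def run(steps: int = 20_000, seed: int = 1) -> EpsilonGreedyAgent:
    env = random.Random(seed)
    agent = EpsilonGreedyAgent(ACTIONS, epsilon=0.1, seed=seed + 1)
    for _ in range(steps):
        context = env.choice(["research", "purchase"])             # sense
        action = agent.decide(context)                             # decide
        converted = env.random() < TRUE_RATES[(context, action)]   # act (simulated response)
        agent.learn(context, action, 1.0 if converted else 0.0)    # learn
    return agent
```

After a run, `agent.best("research")` and `agent.best("purchase")` reflect what the simulated customers actually responded to, while `static_rule` keeps doing whatever it was told until someone edits it. A real decisioning system would use far richer context and more careful exploration, but the loop has the same shape.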

4. Experiment: Test Agent Decision Quality, Not Just Campaign Performance

A/B testing emails is experimentation at the task level. When you deploy agentic AI, experimentation needs to happen at the system level.

The redesign prompt: Test agent decision quality, not just campaign performance.

What you’re really optimizing is agent judgment under different conditions. Can the agent properly weight recency versus frequency signals? Does it handle seasonal patterns appropriately? Can it identify when a customer is in research mode versus purchase mode? Does it respect fatigue signals?

This requires a different experimental mindset. You’re not testing “which message performs better” but rather “how well does this agent decide what message to send, and under what conditions does its judgment break down?”

Workflow implication: Experimentation becomes continuous agent improvement rather than discrete campaign A/B tests. You build systems that let agents experiment within guardrails, learn from outcomes, and autonomously improve their decisioning over time.
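One way to start testing decision quality rather than campaign performance is a per-condition scorecard. The sketch below assumes you log each decision with the condition the customer was in, whether it came from the agent or from a baseline holdout group still running the old rules, the outcome, and whether it ignored a fatigue signal. Those field names, the condition labels, and the `min_samples` cutoff are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LoggedDecision:
    condition: str         # hypothetical labels, e.g. "research_mode", "purchase_mode"
    policy: str            # "agent" or "baseline" (a holdout using the old rules)
    converted: bool
    ignored_fatigue: bool  # contacted a customer already flagged as fatigued


def scorecard(logs, min_samples: int = 50) -> dict:
    # Aggregate outcomes per (condition, policy) pair.
    stats = defaultdict(lambda: {"n": 0, "conv": 0, "fatigue": 0})
    for log in logs:
        s = stats[(log.condition, log.policy)]
        s["n"] += 1
        s["conv"] += int(log.converted)
        s["fatigue"] += int(log.ignored_fatigue)

    # Judge the agent condition by condition, not campaign by campaign.
    report = {}
    for condition in sorted({c for c, _ in stats}):
        agent, base = stats[(condition, "agent")], stats[(condition, "baseline")]
        if agent["n"] < min_samples or base["n"] < min_samples:
            report[condition] = "insufficient data"
        elif agent["fatigue"] > 0:
            report[condition] = f"guardrail breach: {agent['fatigue']} fatigued contacts"
        else:
            lift = agent["conv"] / agent["n"] - base["conv"] / base["n"]
            verdict = "judgment breaks down" if lift < 0 else "ok"
            report[condition] = f"{verdict} ({lift:+.1%} vs baseline)"
    return report
```

Conditions flagged as "judgment breaks down" or "guardrail breach" are where you would tighten the agent's guardrails or give it better signals before expanding its authority.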

5. Embrace: Build for Agent Evolution, Not Human Adaptation

Every few years, marketers face a new platform shift: mobile, social, voice, now AI. The typical response is training programs to help humans adapt to new tools.

The redesign prompt: This time, we should build for agent evolution, not human adaptation.

Here’s what makes agentic AI different: it gets better independently of human skill development. As AI models improve, your agent can make increasingly sophisticated decisions without retraining your team, but only if your workflows are designed to accommodate increasing agent autonomy.

This means building flexible governance frameworks that can scale agent authority as capability grows. When agents are limited, they might operate only within narrow guardrails. As they improve, you should be able to expand their decision authority without needing to restructure your entire operation.

Workflow implication: You design adaptive governance systems that can expand agent autonomy as trust and capability increase (along with human supervision), rather than static rules that assume constant agent limitation.
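Here is a rough sketch of what an adaptive governance model might look like in code, assuming three hypothetical autonomy tiers and made-up thresholds. The agent acts alone only inside its current tier; anything outside it is routed to a human, and a periodic human review promotes or demotes the tier based on decision quality and guardrail violations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutonomyTier:
    name: str
    allowed_channels: frozenset
    max_discount_pct: float    # largest offer the agent may make on its own
    max_decision_value: float  # above this value, a human must approve


# Hypothetical tiers; real thresholds would come from Legal, Finance, and Marketing Ops.
TIERS = [
    AutonomyTier("pilot", frozenset({"email"}), 0.0, 100.0),
    AutonomyTier("supervised", frozenset({"email", "sms"}), 10.0, 1_000.0),
    AutonomyTier("trusted", frozenset({"email", "sms", "push"}), 20.0, 10_000.0),
]


@dataclass
class Governance:
    level: int = 0

    @property
    def tier(self) -> AutonomyTier:
        return TIERS[self.level]

    def needs_human(self, channel: str, discount_pct: float, value: float) -> bool:
        # Escalate any decision outside the current tier's authority.
        t = self.tier
        return (channel not in t.allowed_channels
                or discount_pct > t.max_discount_pct
                or value > t.max_decision_value)

    def review(self, quality_score: float, violations: int, promote_at: float = 0.9) -> str:
        # Periodic human review: any violation demotes; sustained quality promotes.
        if violations > 0 and self.level > 0:
            self.level -= 1
        elif violations == 0 and quality_score >= promote_at and self.level < len(TIERS) - 1:
            self.level += 1
        return self.tier.name
```

The design choice worth noting is that expanding autonomy means moving to the next tier, not rewriting the workflow: the same `needs_human` check applies at every level, so authority can grow (or shrink) without restructuring the operation.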

6. Everything: Measure System-Level Outcomes, Not Task-Level Efficiency

This is where most AI implementations may reveal their task-level thinking. Teams measure “time saved writing emails” or “number of variations generated” or “reduction in manual work.”

The redesign prompt: Measure system-level outcomes, not task-level efficiency.

When agents make autonomous decisions about customer engagement, you need to measure decision quality and business impact: Are agents improving customer experience? Are they identifying high-propensity moments more accurately than rule-based systems? Are they balancing short-term conversion with long-term relationship building?

This requires measurement frameworks that connect agent decisions to business outcomes across the entire customer journey, not just within individual campaigns or channels. These frameworks typically include a model for measuring agent-driven engagement, decision quality scorecards that evaluate judgment under different conditions, business impact dashboards that track the retention and conversion influenced by agent decisions, and feedback mechanisms through which customer responses inform agent learning.

Workflow implication: You build internal attribution models that capture agent contribution to business outcomes, with visibility into how agent decisions impact metrics around acquisition, retention, expansion, and advocacy over time.

7. Explain: Communicate the New Decision Architecture

When you implement a new marketing tool, you explain its features and benefits. When you implement agentic decisioning, you’re changing fundamental assumptions about how your marketing organization works.

The redesign prompt: Communicate the new decision architecture, not just new tools.

Stakeholders across your organization need to understand who decides (agents versus humans), what agents can decide autonomously, how agent decisions get made, and why this structure benefits both customers and the business.

This transparency isn’t just about change management. It’s about building organizational trust in machine decisioning. When a CEO asks “Why did we send that message to our top customer?”, you need to explain agent logic, not just tool features.

Workflow implication: Marketers should create decision visibility systems that make agent reasoning transparent, with clear documentation of decision frameworks, escalation protocols, and human override processes.
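A decision visibility system can start as something as simple as a structured record written for every agent decision. The sketch below is hypothetical: the field names, the signal contribution scores, and the override format are assumptions. It shows how a single record can answer the CEO's "why did we send that?" question, note whether the decision was escalated, and log a human override alongside the agent's original choice.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    customer_id: str
    action: str               # what the agent chose
    policy_version: str       # which decision framework version made the call
    signals: dict             # signal name -> contribution (hypothetical scores)
    guardrails_checked: list  # guardrails evaluated before acting
    escalated: bool = False   # True if routed to a human per escalation protocol
    override: Optional[dict] = None
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    @property
    def final_action(self) -> str:
        return self.override["new_action"] if self.override else self.action

    def apply_override(self, by: str, new_action: str, reason: str) -> None:
        # The override is stored alongside, not instead of, the agent's choice.
        self.override = {"by": by, "new_action": new_action, "reason": reason}

    def explain(self) -> str:
        # Plain-language explanation built from the top three signals.
        top = sorted(self.signals.items(), key=lambda kv: kv[1], reverse=True)[:3]
        reasons = ", ".join(f"{name} ({weight:.2f})" for name, weight in top)
        text = (f"Agent chose '{self.action}' for {self.customer_id} "
                f"(policy {self.policy_version}) based on: {reasons}.")
        if self.override:
            text += (f" {self.override['by']} overrode it with "
                     f"'{self.override['new_action']}': {self.override['reason']}.")
        return text

    def to_json(self) -> str:
        return json.dumps(asdict(self))
```

Storing records like this as JSON gives auditors and stakeholders the same view of agent logic, escalations, and overrides that the marketing team sees.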

8. Evolve: Develop System Design Skills, Not Just Tool Proficiency

As agentic AI reshapes marketing workflows, it also reshapes marketing careers. The question isn’t whether your job will change, but whether you’re building capabilities that remain valuable as this change accelerates.

The redesign prompt: Develop system design skills, not just tool proficiency.

Task-specific skills become obsolete quickly in the age of AI. If your value proposition is “I’m great at email segmentation” or “I know how to use Platform X,” you may be building on sand. Instead, develop capabilities that remain valuable regardless of which tools dominate: understanding customer behavior and motivations, designing decision frameworks, interpreting business outcomes, leading cross-functional teams, and architecting systems.

As AI commoditizes routine knowledge work, value appears to shift to judgment, curation, and contextual understanding. The marketers who position themselves most strategically appear to be those developing capabilities in system design, agent governance frameworks, and cross-functional orchestration rather than platform-specific execution skills.

Workflow implication: Your professional development should focus on becoming a marketing systems architect rather than a marketing tools operator. You can invest in learning how to design governance frameworks, build experimentation methodologies, and translate technical capabilities into strategic advantage.

What This Actually Looks Like in Practice

Let me contrast old versus redesigned workflows to make this concrete:

Old workflow (optimized with AI): Marketer segments audience based on quarterly analysis, schedules campaign for next week, AI generates personalized subject lines, campaign launches on schedule, team measures open rates and clicks, insights inform next quarterly planning cycle.

Redesigned workflow (8 Es applied): Agent continuously scans customer signals and behavioral patterns (Explore), makes real-time decisions about optimal message, timing, channel, and content based on individual context (Exchange/Experiment), executes autonomously within governance guardrails defined by cross-functional team (Engage), learns from every interaction to improve future decisions (Embrace), surfaces strategic patterns and business impact to human strategists (Everything), provides transparent decision explanations when stakeholders inquire (Explain), while marketers focus on designing better decision frameworks and building strategic capabilities (Evolve).

Notice the fundamental difference: In the first scenario, humans orchestrate campaigns with AI assistance. In the second, agents orchestrate engagement with human oversight. The human role shifts from campaign manager to system architect.

The first workflow asks “How can AI make my campaigns better?” The second asks “What should marketing look like when AI can decide?”

The Choice Ahead

The temptation of optimization in the corporate world is understandable. It feels productive. It requires less organizational upheaval. You can point to clear efficiency gains and quick wins.

But here’s what Choudary warns about in his analysis of AI transformation: organizations don’t fail at AI adoption because they don’t adopt AI, but because they use it solely to optimize within an older frame.

Agentic AI and AI decisioning appear positioned to restack marketing work whether marketers deliberately plan for it or allow it to happen reactively. The question is whether you’ll deliberately design the future system using frameworks like the 8 Es, or whether you’ll wake up one day to discover that piecemeal optimization has created a Frankenstein workflow that nobody fully understands or controls.

The organizations that thrive won’t be those with the most optimized workflows, but those who recognized that AI decisioning requires fundamentally rethinking how marketing work gets organized, governed, and measured.

So before launching another initiative to “fix your marketing workflows,” ask yourself: Should this workflow exist at all in a world where AI can decide?

The answer might just reshape your entire marketing operation.